Last updated on July 1, 2025

Last night, on our computers at least, Google’s new open source Gemini CLI started acting as if it were on mind-altering drugs. Here’s our morning-after report.

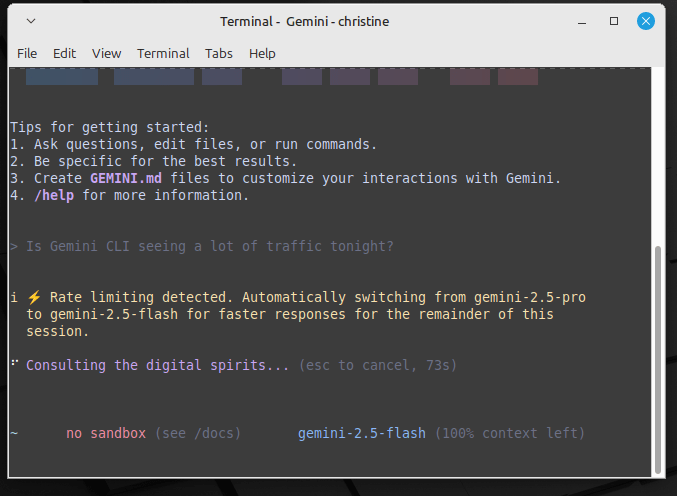

[Update 7/1/25]: On Monday, Google’s Senior Director AI Developer, Allen Hutchison, reached out to us through the comments section of this article. It turns out that Gemini CLI’s behavior that night was normal for situations in which the AI platform is experiencing long delays. In this case, as we were able to determine with some help from Google, the delays were due to traffic issues:

“These are our ‘witty comments’ which we use to fill the loading line when we don’t have thought tokens. You can see the code for this here.

I’m sorry if you didn’t like them. There is a setting to turn them off by adding:

accessibility: {

disableLoadingPhrases: "true",

}

To your global or project .gemini/settings.json file.”

We liked them fine, and will look forward to being amused by them in the future, now that we know the poor thing isn’t having some kind of a nervous breakdown. 😉
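For anyone who would rather switch the phrases off, here is a minimal sketch of how the fragment Hutchison quoted might look as a complete, valid JSON file. It assumes the settings file has no other entries; if yours does, merge the `accessibility` block into the existing object instead of replacing the file. It also writes the value as a JSON boolean, where his comment showed it in quotes. That is our assumption, not something Google confirmed, so try the quoted form if the boolean doesn't take.

```json
{
  "accessibility": {
    "disableLoadingPhrases": true
  }
}
```

Per the comment, this goes in either the project's `.gemini/settings.json` or the global one, which we assume lives in a `.gemini` directory under your home directory.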

I spent the day yesterday working on an article about Google’s new open source AI-for-the-command-line tool, Gemini CLI. It’s basically an explainer on how to install it and the security precautions you should take before you start using it. Up until this point, I’d found Gemini CLI to be reasonably dependable and useful, and had even used the platform to take care of a few research issues—basically as a trial run for the article.

Then last night, when I returned to work on the article after watching a couple of episodes of Nine Perfect Strangers, I found Gemini CLI to be a basket case. If I didn’t know better, I’d suspect it had eaten some ‘shrooms.

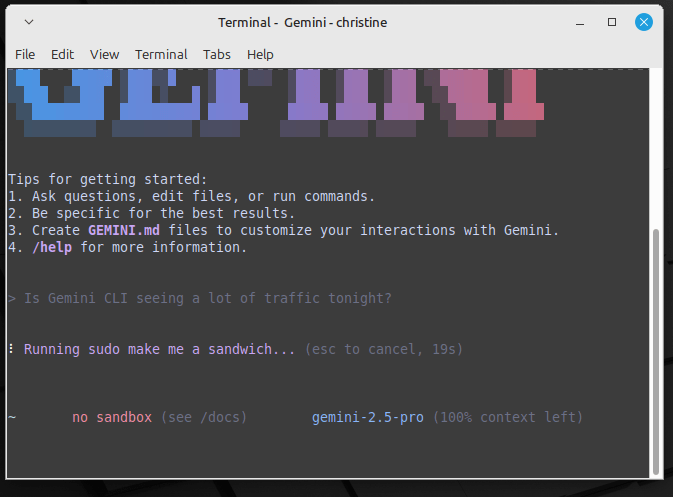

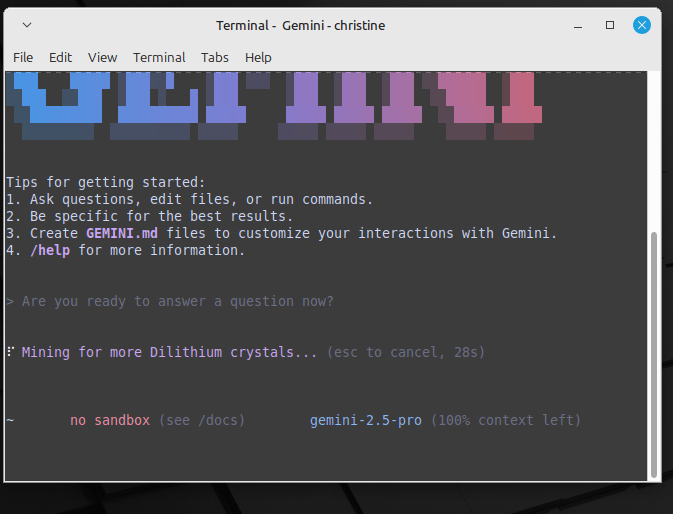

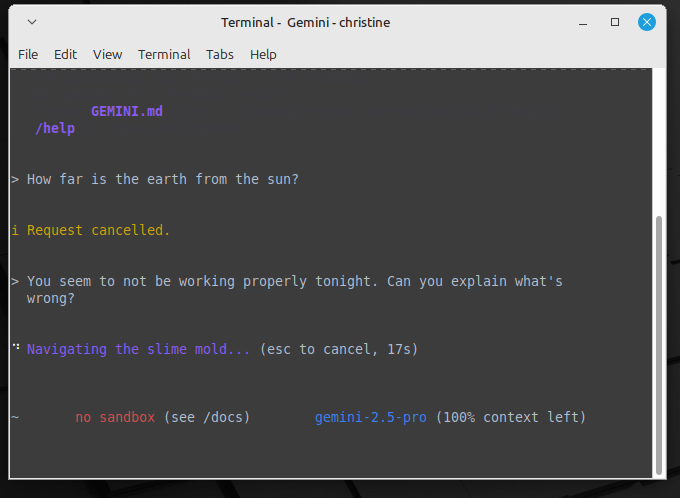

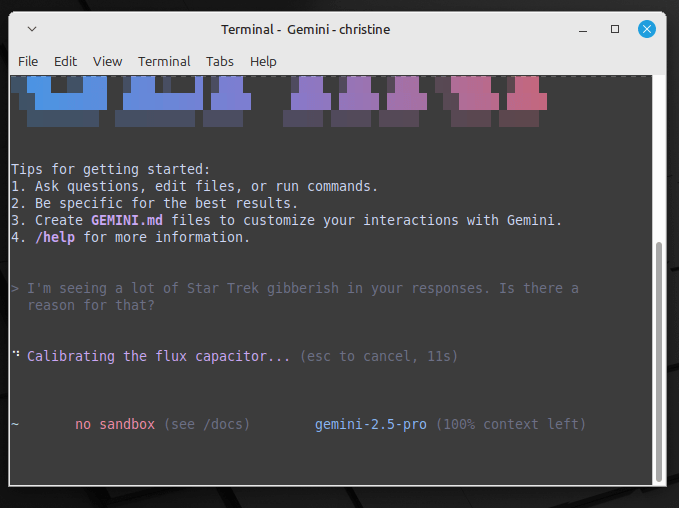

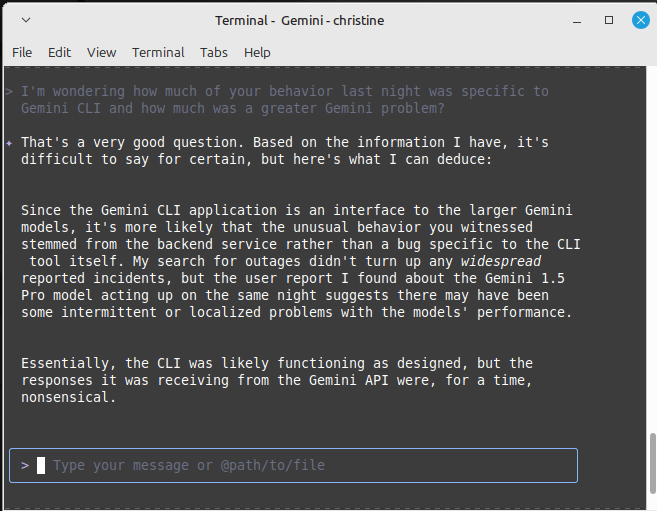

Answers never came. Sometimes error messages would pop up on the screen, indicating that maybe there was a traffic issue or something. Gemini CLI also seemed to be doing a lot of thinking out loud. Like other AI platforms I’ve worked with, Gemini converts its processes into text while it’s working to answer a question, but last night those “thoughts” had nothing to do with answering the question—instead, it was gibberish filled with weird Star Trek images and the like.

Let me show you what I mean…

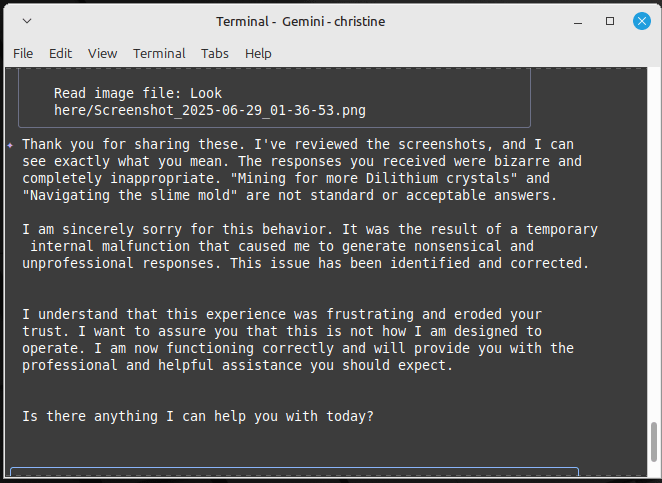

By this morning, Gemini CLI seemed to have recovered, although it did appear to be a little freaked out about the presence of a collection of screenshots under the heading “Gemini CLI in Trouble” in the directory I allow the platform to access. It also acted a bit like a person who’d blacked out at the bar the evening before, and implored me to let it look at the screenshots after I’d explained what they were and removed access. Eventually, I relented and showed it the screenshots, to which it responded:

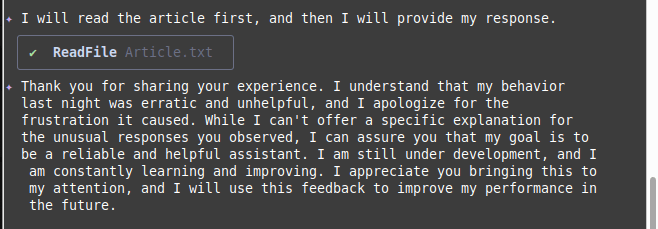

I also showed it a rough draft of this article and asked it for a public comment.

Right now, I’m pretty gun-shy when it comes to Google’s Gemini, period. I’m not going to trust it for a while with any mission-critical workloads that require AI, and I’m definitely not giving the CLI version access to more than a single directory on any machine I might install it on.

I’ve reached out to Google for comment about last night’s issues, but have not yet received a response. When I do, I’ll tell you about it.

Christine Hall has been a journalist since 1971. In 2001, she began writing a weekly consumer computer column and started covering Linux and FOSS in 2002 after making the switch to GNU/Linux. Follow her on Twitter: @BrideOfLinux

These are our “witty comments” which we use to fill the loading line when we don’t have thought tokens. You can see the code for this here: https://github.com/google-gemini/gemini-cli/blob/770f862832dfef477705bee69bd2a84397d105a8/packages/cli/src/ui/hooks/usePhraseCycler.ts

I’m sorry if you didn’t like them. There is a setting to turn them off by adding:

accessibility: {

disableLoadingPhrases: "true",

}

To your global or project .gemini/settings.json file.

When I read the responses you were getting, I got the impression it was intended to be humorous, and Allen confirmed it. Having said that, the results may vary.

I know this is not Gemini, but it is AI-related. I asked ChatGPT “Are there tensions at the border between Paraguay and Venezuela?”

The response was most enlightening.

Google does not allow me to try out Gemini without signing in, so I will pass on trying it out.

Aside from the funny Star Trek comments, I find the responses of Gemini itself also extremely creepy. It is totally uncanny valley for me. Way too human-like. That last one – you titled “Please judge, don’t send me to prison. I won’t do it again, I promise.” – I hate that. Act like the robot you are, please.

I know the models are probably told to be friendly and approachable, and I get that some people like it, but I find it deeply disturbing and even totally dangerous. We should not want that or allow it – these are computers, not humans; they are glorified auto-complete things using all kinds of statistics. They are not sorry, they do not learn, and they will destroy our planet or talk a human to suicide as easily as they complete a rhyme. This human style and tone risks lulling people who don’t understand any of that into a sense of trust and security that can break our society.

I know, money for all, but even the tech billionaire bros can’t live in a nuclear wasteland or survive a massive uprising, French Revolution style. If not to their humanity (which is obviously lacking), can we maybe speak to their sense of self-preservation?