Those who keep up with my posts on Google Plus might know about my month-long struggle with Google Drive. For those who do not, here’s the deal. I split a 200 gig account with my organization Reglue. Half of it I pay for so I have a place to backup my important stuff. The other half is dedicated to files and other documents for Reglue.

I learned a hard lesson in 2011: you can’t back your stuff up too often. Every record we had literally disappeared in a flash during a once-in-a-century lightning storm. Drives on three local computers were fried on the spot.

Our computers were only a portion of the casualty list. The storm also took out two televisions, my entire AC unit and a microwave. The thunder was so close and so powerful it broke out our front bay window and the window glass in two of my trucks. Even top-of-the-line Belkin surge protectors couldn’t stave off these strikes.

Backups were kept on all three computers, with none existing outside of that office. It was a hard lesson I shouldn’t have had to relearn. It took over a year to try to compile all the statistics lost in that power burst, and even then, most of them are lost forever.

So now, as you can imagine, I live on the paranoid fringe when it comes to backups. Part of that entails keeping all of our Reglue stuff spread across two different Google Drive accounts, two offsite and synced hard drives, and a daily backup on a 64 gig thumb drive and a 128GB SSD. That SSD only gets plugged in and mounted during backups. The rest of the time it is put away in a waterproof container.

Let’s talk about that Google Drive account.

On my side of the Drive account I have just over 90 gigs of personal stuff. That includes a 10 gig music collection, my 70 gig video library and my complete graphics and icon libraries, which weigh in at 7 gigs. Life was good and the digital things I value were safe.

Too safe it turns out.

I downloaded my music and graphics library on a couple of computers at Reglue just a few days ago. No problems, no hitches, just downloaded zip files that were extracted successfully upon request.

Then, recently, the large downloads started failing at the very last byte with the message: “Unknown Network Error.” “Hmmm,” I mused. “Let’s go over to the shop and get these downloads started there.” Nope, same issue.

So the files that I was able to download a short time ago suddenly fail with an unknown network error? On two different networks? That ain’t right. I spent 45 minutes with a Time Warner script reader tech support specialist who said she couldn’t escalate my call due to long wait periods.

Click.

That’s what it used to sound like when you hung up on someone, which is what I did. I’m old school…that’s just the way I roll. Even though I am a paying customer and am entitled to paid phone support, radiation therapy hosed my voice and I can only speak for maybe 10 minutes at a time. Phone support would have been an exercise in frustration.

After putting up with it for a couple of weeks, I opened a chat tech support ticket on Google Drive Help Desk and ‘splained the problem. An extremely nice tech worked with me for a while and then said that he needed to talk to a couple of his associates and brainstorm with them to see if they couldn’t figure out what was going on.

And just for the record, this is my original request for assistance:

“I have two large files I download on a semi-monthly basis, a 7 gig and a 10 gig file. In the past couple of weeks, these zip files get to the last byte, then it throws an ‘unknown network error.’ I have had friends download the files with varying results. It doesn’t seem to be affected by ISP, browser, operating system or geolocation.

“This problem is reported across Windows, Linux and Mac, with some claiming that cleaning up the (Windows) registry fixed it. That doesn’t apply to me as I am using Linux. Also, I am not using Insync or any syncing tool. I did call my ISP (Time Warner) but couldn’t get past the script reader to any real support. Where do I start looking to alleviate this problem?”

To which I received a promising response:

“Hi Ken,

“Thanks for your patience as we continue to work on resolving your issue. I just wanted to let you know that I’ve escalated the issue and our specialists are working on finding a solution. I’ll get back to you as soon as I receive an update.

“In the meantime, please feel free to reply if you have further questions.

“Thanks again for contacting Google Support!

“The Google Support Team”

Okay, this sounds good. Especially the part about escalating the problem. This should be fixed shortly…right?

That prompted me to gather the results from a couple dozen G+ friends who tried to download the 9.7 gig music zip file and posted back their results in the Google Plus discussion. This is the cross section of those results I sent back to my tech support guy:

“Download results for 10 gig music.zip file on Google Drive:

“All failures happened at the very last byte of the download, which then displayed ‘unknown network error.’

| Location | ISP | Result | Browser/Platform |
|---|---|---|---|
| Colorado Springs, CO | Comcast | Success | Firefox/Linux |
| Round Rock, TX | Time Warner | Failure | Chrome (no extensions)/Linux & Firefox & IE/Windows 7 |
| Bremen, Germany | Unknown | Success | Firefox/Linux |
| Perth, Australia | Unknown | Success | Chrome (incognito)/Linux |
| Austin, TX | Time Warner | Failure | IE9/Windows 8 (no firewall or AV) |
| Columbus, OH | Comcast | Failure | Chrome (no extensions)/Linux & Safari/Mac |
| Bamberg, Germany | Kabel Deutschland | Success | Firefox/Linux |
| San Angelo, TX | Comcast | Failure | IE/Windows 7 (with & without AV) |
| Tampa Bay, FL | XFINITY | Failure | Chrome/Linux |
| Columbus, TX | GlobalNet | Failure | IE/Windows 7 |
| West, VA | Suddenlink | Failure | Chrome & Firefox (with & without extensions)/Linux |

Just to make the whole thing more confusing, a 5 gig ISO file downloaded just fine from the Drive account of my buddy +Randy Noseworthy.

I was sure this information would be helpful in at least starting to explore the problem. I did receive a reply fairly soon:

“Hi Ken,

“If the .zip file doesn’t contain any sensitive data, would you be able to create a copy and share the item with me? I’ll attempt to replicate the issue on my end. If possible, please create a copy and share the item with Mrtechguy@gmail.com.”

And I did just that. Happiness welled from within as I anticipated a solution to this problem. Giddiness and thoughts of celebratory intake of various beverages seemed on the horizon. This problem had been bugging me a lot. Unfortunately, all of the warm fuzzy feelings were killed in a single deadly shot.

“Hi Ken,

“I noticed that the zip file you just shared was 9.7GB. This actually exceeds the download limitation through the web browser, which is why there is an error with this one in particular. The download limit through the web version of Drive is actually capped at 2GB per download.

“Was there another zip file (less than 2GB) that is causing the same error?”

Uh, really? Didn’t I mention above that the problem was with a 10 gig file? Didn’t he see in the posted results that others were able to download the file just fine? Hold on just a second. Let me go see. Feel free to talk among yourselves for a bit.

OK, I’m back and I just checked. I most certainly did mention that one of the files causing this trouble was 10 gigs. So we’ve exchanged what…two or three emails since and you didn’t understand the actual problem?

My earlier attempts to download didn’t have any size limitations. Some of my friends downloaded the file multiple times on multiple browsers on multiple operating systems to make sure it wasn’t a software problem. Why didn’t their downloads fail at the last minute?

And yes, I am aware of Grive and that I can use it to bypass the browser limitations. That’s not the point. Some people can get a complete download via their browsers and some cannot. I want to know why. I wanna be one of those people who can. Besides that, we have directors and some of our Reglue kids who access several ISO files larger than 2 gigs. I’m not going to ask them to use the command line when there is obviously an easier way to do it. Not when it seems that the browser method can succeed, albeit sporadically.

Tech support did offer me this “solution.”

“Hi Ken,

“Thanks for getting back to me so quickly!

“You should be able to download the files in smaller batches (less than 2GB) without any issues.

“Another option would be to use another service called Google Takeout. This service allows you to create an archive of your data and then download it locally to your computer. To my knowledge, using this option does not have a size limitation.”
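Support’s first suggestion amounts to cutting a large archive into pieces that each fit under the 2GB cap and stitching them back together after download. Here is a minimal Python sketch of that idea. The `split_file`/`join_parts` names, the `.001`-style part suffixes and the 1.9 GB part size are illustrative assumptions, not anything Google provides.

```python
import os
import tempfile
from pathlib import Path

# Assumed part size: 1.9 GB (decimal) stays under a 2GB cap whether
# "GB" means 10**9 or 2**30 bytes.
PART_SIZE = 1_900_000_000
BUF = 8 * 1024 * 1024  # copy in 8 MiB chunks so memory use stays small


def split_file(src: Path, part_size: int = PART_SIZE) -> list[Path]:
    """Write src as src.001, src.002, ... each at most part_size bytes."""
    parts = []
    with open(src, "rb") as f:
        index = 1
        while True:
            part = src.with_name(f"{src.name}.{index:03d}")
            written = 0
            with open(part, "wb") as out:
                while written < part_size:
                    chunk = f.read(min(BUF, part_size - written))
                    if not chunk:
                        break
                    out.write(chunk)
                    written += len(chunk)
            if written == 0:
                part.unlink()  # source exhausted: drop the empty trailing part
                break
            parts.append(part)
            index += 1
    return parts


def join_parts(parts: list[Path], dest: Path) -> None:
    """Concatenate the parts, in suffix order, back into one file."""
    with open(dest, "wb") as out:
        for part in sorted(parts):
            with open(part, "rb") as f:
                while chunk := f.read(BUF):
                    out.write(chunk)


if __name__ == "__main__":
    # Tiny sizes so the demo runs instantly; real use would keep PART_SIZE.
    with tempfile.TemporaryDirectory() as d:
        src = Path(d) / "music.zip"
        src.write_bytes(os.urandom(10_000))
        parts = split_file(src, part_size=3_000)
        rejoined = Path(d) / "rejoined.zip"
        join_parts(parts, rejoined)
        print(len(parts), rejoined.read_bytes() == src.read_bytes())  # 4 True
```

The coreutils `split` and `cat` commands, or the multi-volume option in most archivers, do the same job. The sketch only shows that nothing is lost between splitting and rejoining, which is also why it sidesteps rather than answers the question of why some full-size browser downloads succeed.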

I tried it. It does have a size limitation, just a different one from the one we are talking about. The music.zip file failed with a message saying: “This file is too large for your computer.” What? I am downloading this file to a partition that has 700GB of free space. Holy friggin’ cow. Really, Google? The file is too large? You gotta give me something better than that. At least let me operate with the illusion that you know what you are talking about here.

But here’s what should be glaring back at everyone reading this. The information provided above says that a number of people were able to download the file(s) on multiple browsers across multiple operating systems. When I present these facts to support, why is everyone ignoring that fact?

No, it’s not a two gig file size limit. Last month I downloaded this very file a number of times. There are a good number of people who were able to download this file just a day or two ago. Why didn’t they get an unknown network error? Why was a ten gig file allowed to download in other instances? Don’t you want the answers to those questions? Aren’t you the least bit interested in why this happened? Tap, tap, is this thing on? Anyone here? Anyone?

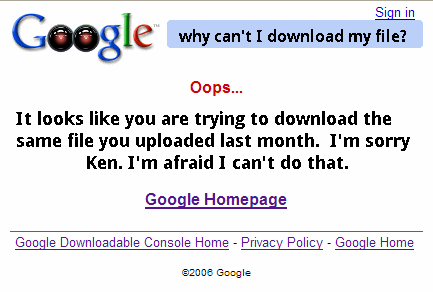

At the risk of sounding whiny, in what universe does any of this make sense? Here’s an idea, Google… Well, wait. Before I give you the idea, let me get this out of the way.

You’re fired.

Google Drive has been a pain in my, uh, backside since day one. The fact that you refuse to create a Linux client for Drive should have been a warning. I have no use for a paid subscription that dictates what file size and format can be downloaded without altering it.

So here is the idea–I’ll even waive my consultation fee. When someone is uploading a folder or file that will have to be broken down in order to download it again, wouldn’t a nice little balloon popup informing the user of that be in order? I mean, I’m not a multi-billion dollar enterprise with more money than God. I’m just a guy that added to those billions because I wanted to support you. I now see where I screwed up.

I assumed that part of that deal was that you were going to support me back. Silly me. I assumed the file I am downloading would be just like the one I uploaded. It stands to reason that if the user is going to be asked to download a file that is different from the one he uploaded, that might be a good piece of information to pass along.

Ya think?

Then again, when you get so big that your customers are reduced to help desk ticket numbers or statistics in ad revenue matrices, treatment like this is inevitable. But all is not lost. Your tech support guy turned me on to Google Takeout. Now I know how to get my files back before we cancel our subscription.

And the Tech Support Guy? He’s a great guy and it was obvious that he wanted to help. He’s working in handcuffs, confined by the guidelines of Google Support. I’ve personally been fired for deviating from a tech support script, so I know. This is nothing against him personally. I thank him for his time. I just feel bad for him.

Ken Starks is the founder of the Helios Project and Reglue, which for 20 years provided refurbished older computers running Linux to disadvantaged school kids, as well as providing digital help for senior citizens, in the Austin, Texas area. He was a columnist for FOSS Force from 2013-2016, and remains part of our family. Follow him on Twitter: @Reglue

This is not to excuse Google. They don’t deserve our support, as they don’t support us. They exploit Linux and FOSS, and the support they give back is only what furthers their ability to exploit Linux and FOSS even more. So long as they don’t see a need to support Linux desktops, they won’t.

It’s not unusual for tech people to ignore pertinent data, especially when that data goes against a preconceived conclusion. I’ve done it myself, and I’ve had to fight tooth and nail to get others to accept that there is information they are ignoring. It happens.

The biggest problem with this happening over the phone or via chat or email is that it’s all but impossible to get people to go back and reread the previous email… and recognise that they are ignoring information. It’s frustrating. Many’s the time I’ve had to wait patiently while a tech on the other end explains to me how to fix the wrong problem, so that at the end I can say “yes, but that doesn’t explain/fix …”, then have to go over the issue once again, before finally, sometimes, getting them to actually run the tests I want them to run, and finally get the problem solved.

Yes, I’m fortunate: I get real 24/7 tech support with my business account, not script readers. But sometimes it does take real work to get that support.

Basically, Ken, you are caught up in the classic Catch-22 situation. You’re d4$$ed if you do and d4$$ed if you don’t.

You’ve been d4$$ed by that bolt of lightning that ruined your backups, and then by discontinuing local backups and relying on the almighty Google, your download file-restore efforts are also d4$$ed. A no-win situation!

(Ironic that googlesyndication.com is trying to run a js/cookie even as I write this!)

“and then by discontinuing local backups and relying on the almighty Google…”

No, I did not discontinue local backups…

“I live on the paranoid fringe when it comes to backups. Part of that entails all of our Reglue stuff spread across two different Google Drive accounts, two offsite and synced hard drives and a daily backup on a 64 gig thumb drive and a 128GB SSD. That SSD only gets plugged in and mounted during backups”

I believe 64 gig thumb drive and a 128 gig SSD qualify as “Local”.

Just sayin’….

“message saying: ‘This file is too large for your computer.’ What?”

Uh, this made me think about RAM, swap, /tmp, even /var/tmp, etc. Where is the file saved temporarily while downloading? Are you sure there is enough space there to handle it? Or where is the browser checking whether there is enough space? /tmp?
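That question can be checked directly rather than guessed at. The Python sketch below reports free space in a few candidate locations using the standard library’s `shutil.disk_usage`. The list of directories is a guess (browsers differ in where they stage in-progress downloads, and many write the partial file into the destination folder itself), `free_space_report` is a hypothetical helper name, and reading “9.7GB” as 2**30-byte gigabytes is an assumption.

```python
import shutil
import tempfile
from pathlib import Path

# Size support quoted for music.zip; treating "GB" as 2**30 bytes is an assumption.
NEEDED = int(9.7 * 1024**3)


def free_space_report(paths, needed_bytes: int) -> dict[str, tuple[int, bool]]:
    """Map each existing directory to (free bytes, enough room for needed_bytes?)."""
    report = {}
    for p in paths:
        path = Path(p)
        if not path.is_dir():
            continue  # skip locations that don't exist on this machine
        free = shutil.disk_usage(path).free
        report[str(path)] = (free, free >= needed_bytes)
    return report


if __name__ == "__main__":
    # Candidate staging locations; which one a given browser really uses is an assumption to check.
    candidates = [tempfile.gettempdir(), "/var/tmp", Path.home() / "Downloads"]
    for where, (free, ok) in free_space_report(candidates, NEEDED).items():
        print(f"{where}: {free / 1024**3:.1f} GiB free, {'enough' if ok else 'NOT enough'}")
```

If /tmp is a small tmpfs in RAM while the download partition has 700GB free, a report like this would make the mismatch obvious.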

I do notice, though, that all the failed downloads are inside the US (along with one success), which would point to a network issue somewhere “locally” in the US 😉
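The observation can be checked against the results table in the post. This Python sketch simply tallies the eleven reported results by region and by ISP; the US/non-US split, read from the text after the last comma in each location, is the only logic added.

```python
from collections import Counter

# (location, ISP, result) transcribed from the results table in the post.
RESULTS = [
    ("Colorado Springs, CO", "Comcast", "Success"),
    ("Round Rock, TX", "Time Warner", "Failure"),
    ("Bremen, Germany", "Unknown", "Success"),
    ("Perth, Australia", "Unknown", "Success"),
    ("Austin, TX", "Time Warner", "Failure"),
    ("Columbus, OH", "Comcast", "Failure"),
    ("Bamberg, Germany", "Kabel Deutschland", "Success"),
    ("San Angelo, TX", "Comcast", "Failure"),
    ("Tampa Bay, FL", "XFINITY", "Failure"),
    ("Columbus, TX", "GlobalNet", "Failure"),
    ("West, VA", "Suddenlink", "Failure"),
]
NON_US = {"Germany", "Australia"}


def region(location: str) -> str:
    """Classify by the text after the last comma: a country name or a US state code."""
    return "outside US" if location.rsplit(", ", 1)[-1] in NON_US else "US"


by_region = Counter((region(loc), result) for loc, _isp, result in RESULTS)
by_isp = Counter((isp, result) for _loc, isp, result in RESULTS)

if __name__ == "__main__":
    for key, count in sorted(by_region.items()):
        print(key, count)
```

It reports 7 US failures, 1 US success and 3 successes outside the US. The ISP tally also shows Comcast with both a success and failures, so ISP alone does not separate the two groups. With eleven self-selected testers this is suggestive at best.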

I would imagine that any limit on download file size has been put in place to appease the MPAA or other DMCA fanatics. If you have large file backup needs, I would highly recommend purchasing a 2.0TB external USB hard drive and a relatively cheap wireless router with NAS capability. At the end of the day, you plug the USB external drive into the router and run your backup plan, whatever that may be. Then disconnect the drive. All of the online services are subject to secondary lawsuit effects, and/or DMCA takedown notices, and/or ISP blocks, and/or random data theft, and/or random search by any government agency.

I realize this isn’t likely the case, but if the failed download can be retrieved (except for the last byte), then as a workaround you could always pad your files with an extra byte. 😉

A way around the file size download limit would be to split the large files into several smaller ones. Nearly all compression programs have the option to generate volumes of a particular size. Then generate parity files using par2 or something similar. You upload the backup zip file volumes and keep the par files locally. However, the question remains, “Why would that be necessary in the first place?”
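par2 uses Reed-Solomon coding and can rebuild several damaged or missing blocks. As a much simpler stand-in that shows the same principle, the Python sketch below keeps a single XOR parity volume, which can rebuild exactly one missing volume as long as the original volume sizes are stored alongside it. The function names are hypothetical, and this is neither par2’s file format nor its algorithm.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings, zero-padding the shorter one."""
    n = max(len(a), len(b))
    a, b = a.ljust(n, b"\0"), b.ljust(n, b"\0")
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(volumes: list[bytes]) -> bytes:
    """One parity volume: the XOR of every (zero-padded) data volume."""
    return reduce(xor_bytes, volumes, b"")


def recover_missing(surviving: list[bytes], parity: bytes, missing_len: int) -> bytes:
    """XOR the parity with all surviving volumes to rebuild the single missing one."""
    return reduce(xor_bytes, surviving, parity)[:missing_len]


if __name__ == "__main__":
    volumes = [b"first volume", b"second", b"third vol"]
    parity = make_parity(volumes)        # kept locally, per the suggestion
    sizes = [len(v) for v in volumes]    # sizes must be kept too, to trim the padding
    # Pretend volume 2 never made it back from the cloud.
    print(recover_missing([volumes[0], volumes[2]], parity, sizes[1]))  # b'second'
```

Working one byte at a time like this is fine for a demo but far too slow for hundreds of gigabytes; real tools process large blocks in optimized native code.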

retf,

Do you use parity files? I really liked the idea when I first heard about them, but in practice I found them too slow and cumbersome to generate if you have lots of data (hundreds of gigs). I finally gave up on using them, but I’d try again if there were a better way.

Nowadays I use btrfs because of the built in checksums for my files. It can’t recover data the way parity files can, but when combined with regular backups I can be reasonably sure my data isn’t subject to bit rot.
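btrfs does this inside the filesystem, checksumming data blocks and reporting mismatches when they are read or scrubbed. For media that don’t offer that (a FAT-formatted thumb drive, say, or a cloud copy), a rough user-level approximation is to record a hash per file and re-check it later. The Python sketch below does that with SHA-256; the manifest layout and the function names are illustrative assumptions. Like btrfs checksums on a single copy, it only detects corruption; repair still has to come from another backup.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, buf_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        while chunk := f.read(buf_size):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Record a digest for every file under root, keyed by relative path."""
    return {str(p.relative_to(root)): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def find_changed(root: Path, manifest: dict[str, str]) -> list[str]:
    """List files whose current digest differs from the manifest, or that vanished."""
    changed = []
    for rel, digest in manifest.items():
        p = root / rel
        if not p.is_file() or sha256_of(p) != digest:
            changed.append(rel)
    return changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "notes.txt").write_text("backup me")
        manifest = build_manifest(root)                 # record hashes at backup time
        (root / "notes.txt").write_text("backup m3")    # simulate silent corruption
        print(find_changed(root, manifest))             # ['notes.txt']
```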