You may not have thought about it, but every time you ask your favorite generative AI platform a question, you’re pumping greenhouse gases into the atmosphere.

Did I tell you yet that these days I refuse to do anything that might put money into Elon Musk’s pocket? I’m also currently in the process of deGoogling my life. I mention this because these things are in conflict with my efforts to do what’s right for the environment.

A study has been released that looks into the CO₂ emissions of various AI models. It turns out that the lowest emissions, by far, come from Grok AI, which is owned by xAI, the company Elon Musk started to develop an AI model to compete with OpenAI’s ChatGPT. If that wasn’t bad enough, the closest any of the others came was Google’s Gemini AI platform.

The study was conducted by TRG Datacenters, a Houston-based colocation operator with data centers in Houston, Dallas, Austin, and Atlanta, evidently using information based on the energy consumption of servers in its data centers. It determined that Grok AI creates a piddling 0.17 grams of CO₂ per query. Gemini came in at 1.6 grams.

That’s all well and good, but hell no to president unelect Musk and his attempts to screw with Social Security and pollute the night sky for the sake of latency-ridden internet connections, and to Google’s nose in every aspect of my private business.

“As AI adoption continues to rise, finding ways to reduce its energy consumption will be key,” a TRG spokesperson said about the study. “Some models are already designed to be more efficient, but there is still room for improvement.”

What Can a Gram of CO₂ Do Anyway?

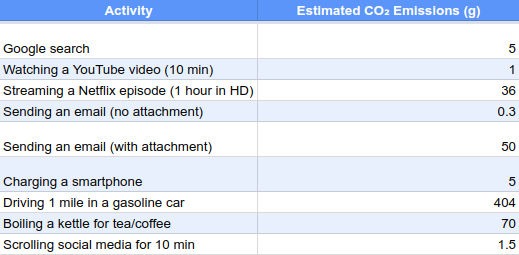

Because most of us have absolutely no idea how a gram of carbon emissions equates to the cost of tea in China — or anything else, for that matter — the folks at TRG compared their figures to everyday digital activities like email, video streaming, and smartphone charging.

For example, the 0.17 grams of CO₂ that Grok AI kicks into the air after you ask it a question turns out to be just a little over half of the CO₂ emissions that result from sending an email with no attachment, and the 1.6 grams produced when Gemini is asked a question is just a little more than what comes from scrolling social media for 10 minutes.

Speaking of Gemini, TRG’s researchers said: “The model benefits substantially from Google’s specialized AI hardware infrastructure and significant investments in renewable energy. Despite being significantly more carbon-intensive than Grok, Gemini operates at less than half the emissions of most competitors, highlighting Google’s focus on computational efficiency.”

Just for reference, according to Perplexity — which turns out to not be very environmentally friendly according to this study — a 12-ounce can of soda contains about 2.2 grams of dissolved CO₂.

Bubbling Beneath the Top 2

There are four other AI brands on TRG’s list, all having larger environmental footprints than Grok or Gemini:

- Llama ranks third, releasing 3.2 grams of CO₂ per query, which is equivalent to sending about 10 regular emails without any attachments. “Meta’s renewable energy commitments contribute to Llama’s relatively better performance compared to some competitors,” TRG said. “The carbon footprint assessment identifies a concerning trend of increasing energy demands as Meta expands its AI operations, potentially affecting future emissions profiles.”

- Claude AI, which comes from Anthropic, a company that was founded in 2021 by former members of OpenAI, comes in fourth with 3.5 grams of CO₂ emissions per query. The researchers point out that it’s somewhat impressive that Claude is more energy efficient than both Perplexity and ChatGPT, considering that the platform is designed to prioritize safety and reliability, which increases computational demands.

“The model represents a middle ground in the emissions spectrum, balancing performance capabilities with moderate environmental impact,” TRG said. Claude’s carbon cost equals streaming YouTube videos for 35 minutes.

- Perplexity AI, the platform I’ve been using lately, takes fifth place with 4 grams of CO₂ per query. “The model’s integrated search capabilities contribute to its higher energy consumption profile,” TRG said. “Emissions vary based on query complexity, with more elaborate searches driving higher carbon costs.” The data center company went on to explain that Perplexity’s numbers demonstrate how adding functionality beyond basic text generation increases environmental impact. Each Perplexity query produces about the same amount of carbon as sending 13 emails.

- ChatGPT (GPT-4), with 4.32 grams of CO₂ per query, has the highest emissions of any of the platforms the study considered. “As one of the most computationally intensive models studied, GPT-4 requires substantial processing power to generate responses,” the researchers said. “Its deep learning complexity creates an environmental cost 25 times greater than the most efficient competitor, highlighting the significant sustainability challenges facing advanced AI systems.” According to the study, a GPT-4 query generates nearly as much carbon as a full phone charge.
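
If you want to check the arithmetic behind these comparisons yourself, a few lines of Python will do it. Treat this as a rough sketch and not anything TRG published: the per-query figures are the ones quoted in this article, the 0.32-gram email figure is my own assumption (backed out of the "3.2 grams is about 10 emails" comparison), and the function names are made up for illustration.

```python
# Per-query CO2 figures in grams, as quoted from the TRG study in this article.
GRAMS_PER_QUERY = {
    "Grok": 0.17,
    "Gemini": 1.6,
    "Llama": 3.2,
    "Claude": 3.5,
    "Perplexity": 4.0,
    "ChatGPT (GPT-4)": 4.32,
}

# Assumption: about 0.32 g per plain email, inferred from the article's
# "3.2 grams is about 10 emails" comparison. Not a figure stated by TRG here.
GRAMS_PER_EMAIL = 0.32


def times_lowest(figures):
    """Return each platform's emissions as a multiple of the lowest one."""
    lowest = min(figures.values())
    return {name: grams / lowest for name, grams in figures.items()}


def email_equivalents(figures, grams_per_email=GRAMS_PER_EMAIL):
    """Return how many plain emails each query's emissions would equal."""
    return {name: grams / grams_per_email for name, grams in figures.items()}


if __name__ == "__main__":
    ratios = times_lowest(GRAMS_PER_QUERY)
    emails = email_equivalents(GRAMS_PER_QUERY)
    for name, grams in GRAMS_PER_QUERY.items():
        print(
            f"{name:16s} {grams:5.2f} g  "
            f"{ratios[name]:5.1f}x lowest  {emails[name]:5.1f} emails"
        )
```

Run it and the GPT-4 row comes out at roughly 25 times Grok's figure, which lines up with the "25 times greater" in TRG's quote.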

“Advances in hardware, more optimized AI models, and increased use of renewable energy in data centers could help lower emissions over time,” the TRG spokesperson added. “AI is here to stay, but balancing innovation with sustainability will be essential in minimizing its environmental impact.”

Christine Hall has been a journalist since 1971. In 2001, she began writing a weekly consumer computer column and started covering Linux and FOSS in 2002 after making the switch to GNU/Linux. Follow her on Twitter: @BrideOfLinux

Looks like the old Elon is a bit of a greenie at heart. Or maybe his engineers are working to undermine him.

I’m confused — the table shows that google search costs 5g CO2 per search… isn’t that more than any of the AIs? Everyone’s saying that the computationally intensive AIs are way more carbon intensive.