While word on the street is that AI workloads are sending server rack densities sky high, Uptime’s 2024 study says it ain’t so… not yet, anyway.

This is the second of a six-part series focused on Uptime Institute’s Global Data Center Survey 2024.

A big takeaway from Uptime Institute’s Global Data Center Survey 2024 is that the surge in server rack density that would push data center power consumption to the point of needing something akin to onsite nuclear generators isn’t happening. In fact, the numbers show that server rack density is on the uptick, but not by nearly as much as press reports on power-hungry data centers running AI workloads might suggest.

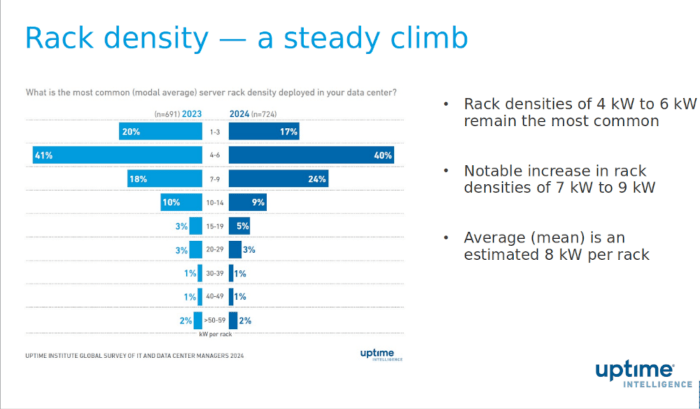

“Most operators don’t have a single rack above 30 kilowatts,” Uptime’s research director Daniel Bizo pointed out in an online briefing on the survey. “That’s a good reminder, a good reality check, that even though there’s been a lot of talk in the past 18 months about fairly steep density jumps, and in some cases extreme densities approaching 100 kilowatts, we don’t really see that. We don’t really pick up on that in the survey.”

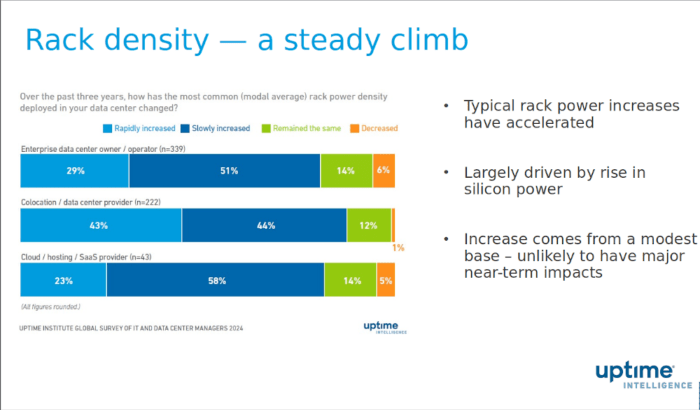

Indeed, across the three main data center types (enterprise on-premises, colocation, and cloud), about half of all data centers on average report that server rack density is slowly increasing, compared with fewer than a third that report a rapid increase. Similarly, 40% of data centers report that their most common rack density is in the rather modest 7kW-9kW range, although this year, at 25%, there was a notable uptick in data centers reporting an average density in the 7kW-9kW range. Only 10% of all data centers reported an average rack density of 15kW or above.

“Where is AI? Where are all those AI training clusters going?” Bizo asked. “Those end up in high concentration in a relatively few sites — so few facilities. For the typical operator, most enterprises, most colocation providers, and even most IT services providers don’t have extreme densities in their data centers, and even if they have some high density racks, what we see is that those are oddities, as opposed to large pieces of infrastructure.”

Adjusting to Higher Densities

That being said, data center densities will continue to rise, which will require new ways to cool equipment and to get power to racks. Uptime’s CTO, Chris Brown, pointed out that data center operators have had to make similar adjustments in the past.

“Twenty years ago we used to distribute low voltage, below 600 volts, produce it at generators and distribute it across data centers,” he said. “And then we got to the point where data center density got so high that that was unfeasible. We now, in larger data centers, distribute medium voltage across more of the data center, but still low voltage from the UPS out, and I think what we’re going to be looking at over the next few years, if this rack density for AI plays out and there are large installations of these ultra high density racks, we’re gonna have to really rethink how we distribute power in a data center, just for the sheer fact that things are gonna get really large, really quick.”

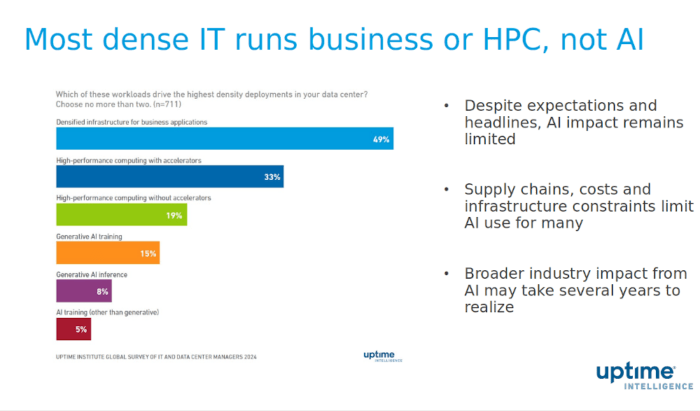

While AI is getting the lion’s share of attention when it comes to increasing density in data centers, the survey indicates that AI is not the main driver.

“We also asked this year a new question about what is driving the highest density deployments,” Uptime research analyst Doug Donnellan said. “When we put the questionnaire for the survey together, I had some expectations that everything AI would be at the top of the list, but here we see that 49% of respondents said that their highest densities are being driven just by standard business applications.”

As they say, the more things change, the more they stay the same.

The Hype and the Reality of AI

The fact that AI, and the changes it requires, isn’t showing up at most data centers doesn’t mean it won’t. Big AI may be “in high concentration in a relatively few sites” now, but the future could look very different, or these workloads could continue to be siloed in data centers specifically designed to handle the requirements of big AI.

Brown indicated that figuring out how the market for AI workloads is going to develop is in many ways the current big question facing data center developers.

“Because AI is so new and everybody’s talking about it, this is the challenge for operators all over the place,” he said. “What they’re seeing in terms of reality is something very different than what they’re hearing, and as they look at trying to plan future data center design and data center installations — knowing that it takes at least 18 to 24 months from planning to funding to building to bringing significant capacity online — they’re wondering where things are going to go.

“It’s very difficult for them because if they design for something like 100 kilowatt racks across a large number of racks and they guess too high, they have a lot of spare capacity, a lot of spare equipment that’s going to cost them a lot of money,” he added. “If they guess too low, they’re going to lose a lot of business and they’re going to be playing catch up.

“So it’s the million-dollar question that the industry is struggling with right now: how much of this AI is there really going to be, how big are the installations going to be, how dense are the installations going to be. It’s something that I know we’re working on and trying to bring some clarity to, but it’s a difficult challenge. It’s something that data center operators are going to be struggling with for the next few years.”

Tomorrow: Data center sustainability and metrics.

Christine Hall has been a journalist since 1971. In 2001, she began writing a weekly consumer computer column and started covering Linux and FOSS in 2002 after making the switch to GNU/Linux. Follow her on Twitter: @BrideOfLinux