The FOSS Force Linux App of the Week — Alpaca

With Alpaca, managing Ollama models on Linux is easier than ever — no proprietary tools required.

I’ve been using Ollama locally on Linux for some time now. I much prefer local AI because I’m more confident my queries will stay private and won’t be used to build a profile of me or to train an LLM. That’s why I use Ollama.

Until recently, I used Msty as my Ollama GUI. Unfortunately, Msty is not open source, and whenever an open source equivalent to an app exists, I’d much rather use it than the proprietary solution.

That’s where Alpaca comes into play. This open source app, released under the MIT license, is just as easy to use as Msty and fits in better aesthetically with the Linux desktop. Alpaca also offers a few more features than Msty, including an image background remover, command line integration, online search, and a Spotify controller (for chat-based control of Spotify). Keep in mind that the command line integration is still in its testing phase, and I was unable to get it to work properly. Even so, Alpaca is a great option.

Installing Alpaca

Alpaca can be installed on any Linux distribution that supports Flatpak. These days, many distros include graphical software managers that support Flatpak apps out of the box, so installation takes a single click. Otherwise, install the app from a terminal with:

```shell
flatpak install flathub com.jeffser.Alpaca
```

If you installed from the command line, log out of your desktop and log back in so the app launcher is added to your desktop menu. Then click the launcher to open Alpaca.
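If you’d rather not log out right away, a quick terminal check can confirm the Flatpak actually landed. The following is a minimal POSIX shell sketch, assuming `flatpak` is on your PATH: it uses `flatpak info` to look up the app ID from the install command and, if the app is found, prints the `flatpak run` command that starts Alpaca directly. The function name `check_alpaca_install` is a hypothetical, illustrative choice.

```shell
#!/bin/sh
# Minimal sketch: confirm the Alpaca Flatpak is installed.
# APP_ID matches the ID used in the flatpak install command above.
APP_ID="com.jeffser.Alpaca"

# Hypothetical helper name, chosen for illustration only.
check_alpaca_install() {
  if ! command -v flatpak >/dev/null 2>&1; then
    echo "flatpak is not available on this system"
  elif flatpak info "$APP_ID" >/dev/null 2>&1; then
    # flatpak info succeeds only when the app is installed.
    echo "$APP_ID is installed; start it with: flatpak run $APP_ID"
  else
    echo "$APP_ID was not found; re-run the install command"
  fi
}

check_alpaca_install
```

Running `flatpak run com.jeffser.Alpaca` starts the app immediately, while the log-out step only matters for getting the launcher into your desktop menu.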

If you don’t have Ollama installed already, the setup wizard presents a button labeled Install Ollama. It takes you to the Ollama GitHub page, which includes the steps necessary to install Ollama on Linux. However, I suggest you simply install Ollama with the following command:

```shell
curl -fsSL https://ollama.com/install.sh | sh
```
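Before pointing Alpaca at Ollama, it can help to confirm the server is actually up. Here is a minimal shell sketch, assuming Ollama is listening on its default address, `http://localhost:11434`; the function name `check_ollama` is illustrative. It prints the installed version with `ollama --version`, then probes the server’s root URL with `curl`, which normally answers with “Ollama is running” when the server is up.

```shell
#!/bin/sh
# Minimal sketch: confirm Ollama is installed and its server responds.
# Assumption: the server listens on Ollama's default address.
OLLAMA_URL="http://localhost:11434"

# Hypothetical helper name, chosen for illustration only.
check_ollama() {
  if command -v ollama >/dev/null 2>&1; then
    ollama --version
  else
    echo "ollama binary not found on PATH"
  fi

  # Probe the root endpoint; curl -f fails on HTTP errors or no connection.
  if command -v curl >/dev/null 2>&1 && curl -fsS "$OLLAMA_URL/" >/dev/null 2>&1; then
    echo "Ollama server reachable at $OLLAMA_URL"
  else
    echo "Ollama server not reachable at $OLLAMA_URL (on systemd distros, try: systemctl status ollama)"
  fi
}

check_ollama
```

On systemd-based distros the install script sets Ollama up as a service named `ollama`, which is why the fallback message suggests checking it with `systemctl`.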

Using Alpaca

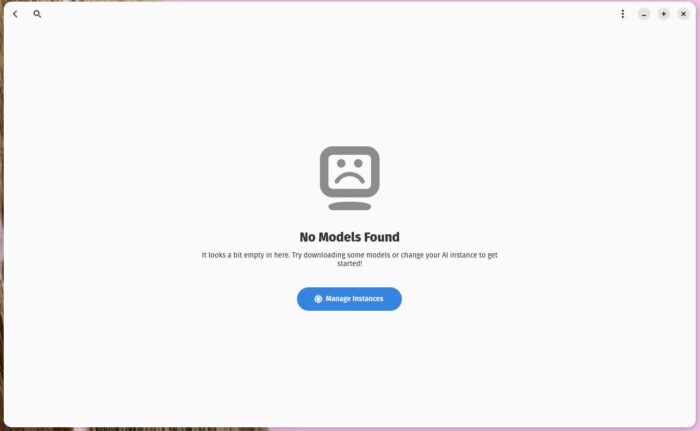

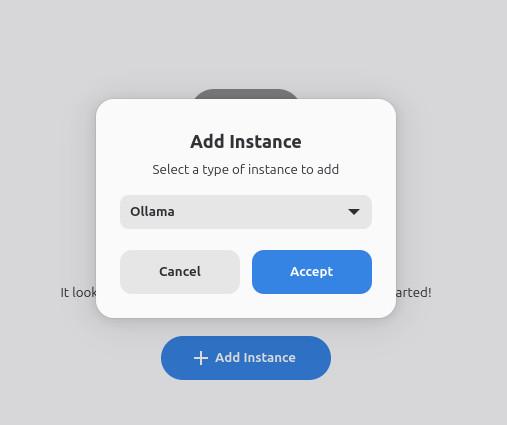

When you first open Alpaca, you’ll be presented with a “No Models Found” warning. Click Manage Instances and, in the next window, click Add Instance. In the resulting pop-up, select Ollama (not Ollama Managed, which has Alpaca run its own copy of Ollama) from the drop-down and click Accept.


You’ll then see the Create Instance pop-up, where you can configure the new instance to meet your needs before saving it.

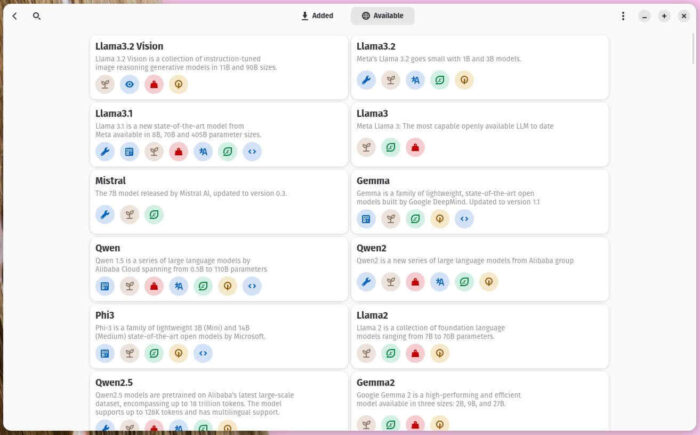

With your instance saved, back out of the Instance window and click Available to view the models you can download. Click one of the Llama models and then, from the resulting pop-up, download the version of the model you want to use.
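Because the Ollama instance talks to the same Ollama server you installed earlier, models downloaded through Alpaca should also be visible from the terminal. A minimal sketch, assuming that server is running locally; `list_models` is a hypothetical, illustrative name. `ollama list` prints the models the server has stored, and `ollama pull` (for example, `ollama pull llama3.2`) is the command-line counterpart to downloading from the Available view.

```shell
#!/bin/sh
# Minimal sketch: show which models the local Ollama server has stored.
# Assumption: Alpaca's "Ollama" instance points at this same local server,
# so models downloaded in Alpaca should appear in this list.

# Hypothetical helper name, chosen for illustration only.
list_models() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama binary not found on PATH"
  elif ! ollama list; then
    # ollama list exits non-zero when it cannot reach the server.
    echo "could not reach the Ollama server; is it running?"
  fi
}

list_models
```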

Once you’ve added your model, you can then go back to the main window and start querying.

And that’s all there is to installing and using the Alpaca AI GUI on Linux. Give this app a go and see if it doesn’t become your go-to.

Jack Wallen is an award-winning writer for TechRepublic, ZDNET, The New Stack, and Linux New Media. He’s covered a variety of topics for over twenty years and is an avid promoter of open source. Jack is also a novelist with over 50 published works of fiction. For more news about Jack Wallen, visit his website.